You can then delete the network as explained previously. Now that we've proven that the Docker containers work, we can begin to port these components to Kubernetes.

You'll need to edit the variable found in the file 'frontend/.env.production'. This determines the URL of the backend service that will be called from the user's browser. Usually you'd replace this with the domain name for your website API, but in this case we'll just point it at localhost, since that's where the Docker container will be hosted. Once you've done this, you can rebuild the frontend with 'mvn clean install', then start the container.
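For reference, the backend container in this walkthrough is reachable on localhost because its port is published when it starts. Below is a minimal sketch of such a command. Only the port numbers (8080 inside the container, 8081 on the host) and the 'NAME_SERVICE_URL' variable name come from this post. The image name, the network name and the URL value are placeholders. The function just prints the command so it can be checked before anything is started.

```shell
# Placeholder values (assumptions, not from the original post).
BACKEND_IMAGE="backend-service"
NETWORK="demo-network"
NAME_SERVICE_URL="http://name-service:8080"

backend_run_command() {
  # -p HOST:CONTAINER publishes Payara Micro's port 8080 on host port 8081.
  # --env overrides the name service URL inside the container.
  # --network attaches the container to a user-defined Docker network.
  echo "docker run -d --network ${NETWORK}" \
       "-p 8081:8080" \
       "--env NAME_SERVICE_URL=${NAME_SERVICE_URL}" \
       "${BACKEND_IMAGE}"
}

backend_run_command
```

To actually start the container once the values look right, run 'eval "$(backend_run_command)"'.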

There are two additional arguments here that you'll notice. The first is the Docker argument to map a container port to a localhost port. In this case, it's mapping port 8080 of the container (the one used by Payara Micro) to port 8081 on the host machine. The reason for this is that JS on the web page will need to be able to access the backend service, and the easiest way to do this is to host the backend on localhost. This will also be solved when we start using Kubernetes.

The second new bit is '--env NAME_SERVICE_URL=' followed by the URL of the name service. You'll recall from before that this service allows the name service URL to be overridden by this environment variable. This is the final bit that allows the backend to find the name service and communicate with it.

The last container is the frontend container. This one runs slightly differently to the other containers in that it serves compiled JS code to the client via Nginx, so it must be compiled beforehand. As part of the Maven build, the command 'npm run build' will be run, which generates the static files to be served to the user. Amongst other things this will disable the dynamic reloading, disable the verbose logging and generally make the code ready for production. It's at this time that all environment variables should be inserted into the compiled code. Because of this, where the Payara Micro service can override the required environment variables at runtime, the JS code requires that they be specified for the Maven build.

On Ubuntu, Snap can be installed with the system package manager.
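As a rough sketch of the Snap and MicroK8s install on Ubuntu, the steps might look like the following. These are the commonly documented commands rather than ones taken from this post, and the 'run' helper and 'DRY_RUN' switch are illustrative additions so that the script prints the commands by default instead of changing your system.

```shell
# Dry run by default: commands are printed, not executed.
# Set DRY_RUN=0 to really run them (requires sudo).
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

install_microk8s() {
  run sudo apt update
  run sudo apt install -y snapd              # Snap itself
  run sudo snap install microk8s --classic   # MicroK8s via Snap
  run sudo microk8s status --wait-ready      # wait until the cluster is up
}

install_microk8s
```

Running the script with 'DRY_RUN=0' executes the same four commands in order.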

Since MicroK8s was developed by the Kubernetes team at Canonical, it must be installed using Snap, Canonical's Linux package manager. Snap can be used across many Linux distributions, makes sure applications are installed the same way everywhere, and updates them automatically.

If you're running Windows, Docker for Windows has support for Kubernetes. If you're using Linux, two front-runners are MicroK8s and minikube, the latter of which works on Windows, macOS and Linux. Whereas minikube runs a Kubernetes cluster inside a single VM, MicroK8s is installed using Snap and runs locally with minimal overhead. For ease of install and use, this blog will be using MicroK8s.

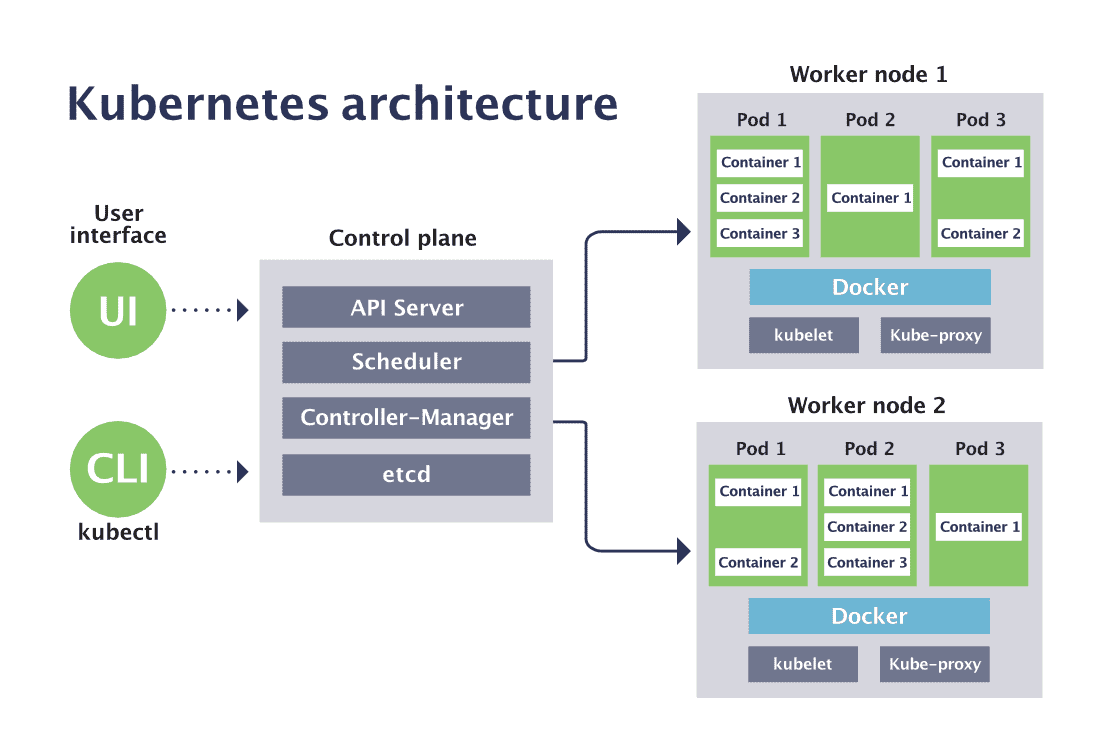

This blog will walk through some of the basics of Kubernetes, and will set up a simple microservice application locally to demonstrate its use. It assumes you already have the necessary tools installed and configured. Kubernetes is intended to be used with a cloud provider. Despite this, there are several options for running a Kubernetes cluster locally. Each implementation will essentially end up providing the same interface through kubectl. The idea is that you should be able to deploy in a very similar way whether running locally or in the cloud.
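To illustrate that shared kubectl interface, here is a small sketch that prints the same few commands for whichever cluster you point it at. The deployment name 'backend' and the image tag 'backend-service:2' are hypothetical, and 'microk8s kubectl' is assumed as the default because that's how MicroK8s exposes kubectl. Set 'KUBECTL=kubectl' for minikube or a cloud cluster.

```shell
# Which kubectl to use; override with KUBECTL=kubectl for other clusters.
KUBECTL="${KUBECTL:-microk8s kubectl}"

# Hypothetical deployment name and image tag, for illustration only.
DEPLOYMENT="backend"
NEW_IMAGE="backend-service:2"

kubectl_steps() {
  # List the nodes in the cluster.
  echo "$KUBECTL get nodes"
  # Scale the deployment to two instances.
  echo "$KUBECTL scale deployment/$DEPLOYMENT --replicas=2"
  # Trigger a rolling upgrade by changing the container image
  # (assumes the container is also named "backend").
  echo "$KUBECTL set image deployment/$DEPLOYMENT $DEPLOYMENT=$NEW_IMAGE"
  # Watch the rollout until it completes.
  echo "$KUBECTL rollout status deployment/$DEPLOYMENT"
}

kubectl_steps
```

Only the prefix changes between environments. The subcommands stay the same.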

There are still problems with this architecture though, for example:

- Stopping and starting instances is slow.
- Communicating between nodes in this architecture can be quite complex.

If you're using a monolithic architecture over microservices, you may not need to deal with communication between nodes and so won't experience these issues. You will still need to worry about instance startup time whenever introducing rolling upgrades or performing a failover. This can incur extra costs: the risk of losing requests becomes higher the longer a restart takes, so you're likely to want another instance running to minimise that risk.

Kubernetes is a platform for managing containerised services. This means that it's a tool made to abstract away details such as separation of nodes, while automating things like rolling upgrades, failover, and scaling of services.

Kubernetes is most commonly used with Docker-managed containers, although it doesn't strictly depend on Docker. Kubernetes defines a Container Runtime Interface (CRI) that container platforms must implement in order to be compatible. These implementations are colloquially known as "shims". This makes Kubernetes platform agnostic, so instead of Docker you're free to use other platforms with corresponding shims, such as CRI-O or Kata Containers.

Automatic scaling and failover are just two of the benefits provided by modern cloud platforms such as Amazon Web Services (AWS), Microsoft Azure and Google Cloud Platform (GCP).
